A/B testing gives marketers a way to base campaign decisions on measured results instead of assumptions. This article explains what A/B testing is, how it improves marketing campaigns, and how to set up, run, and analyze a test.

By testing one variable at a time against a control, marketers can see which changes actually raise conversion rates. The sections below cover the benefits of this technique and practical strategies for implementing it.

Introduction to A/B Testing

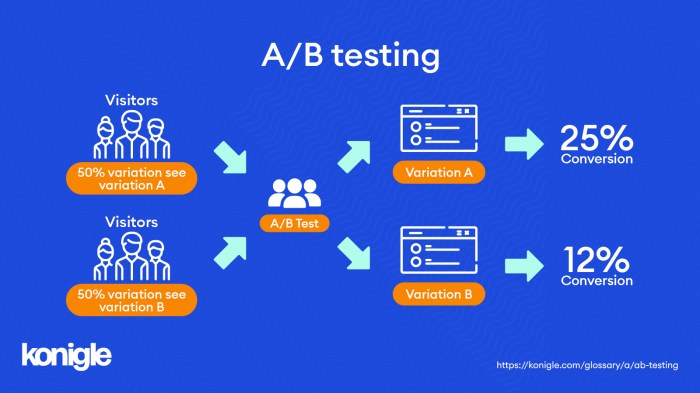

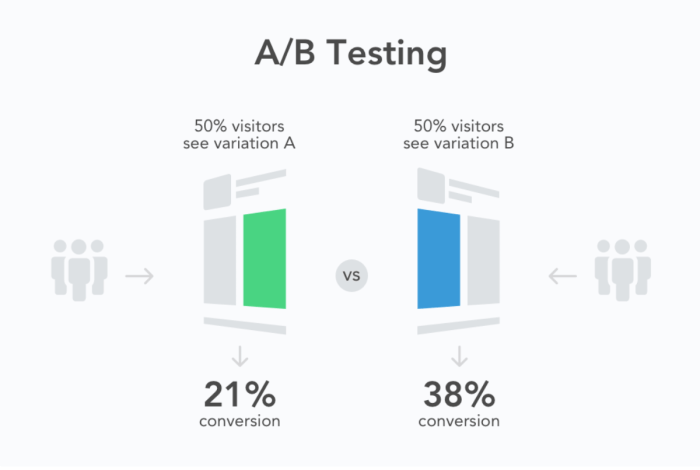

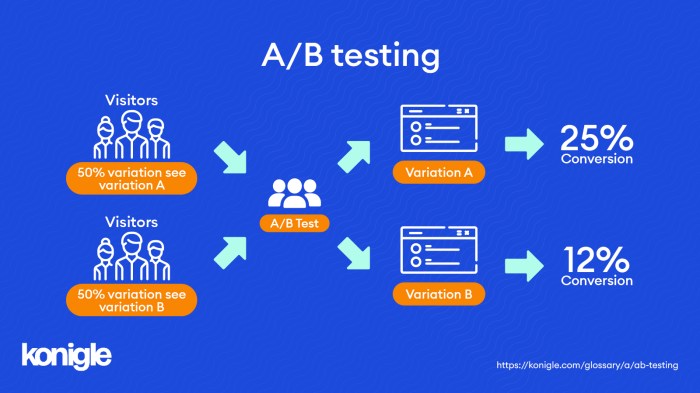

A/B testing is a method used in marketing to compare two versions of a webpage, email, or other marketing asset to determine which one performs better. The two versions (A and B) are shown to randomly assigned, comparable audiences over the same period, and the results are compared on a metric chosen in advance.

The purpose of using A/B testing in marketing campaigns is to optimize and improve the effectiveness of marketing strategies. By testing different elements such as headlines, images, call-to-action buttons, or layouts, marketers can identify which version resonates more with their target audience and drives higher conversion rates.

Examples of A/B Testing Improving Marketing Strategies

- Changing the color of a call-to-action button from green to red resulted in a 21% increase in click-through rates.

- Testing different subject lines in email campaigns led to a 15% higher open rate for one variation over the other.

- Modifying the placement of product images on a webpage increased the average time spent on the site by 30%.
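Figures like the ones above are usually relative lifts: the change in a metric divided by its value for the control. A minimal sketch of that arithmetic follows; the function name and the rates are hypothetical and chosen only for illustration.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Return the relative change of the variant over the control as a fraction."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical click-through rates: 4.0% for the control, 4.84% for the variant.
lift = relative_lift(0.040, 0.0484)
print(f"{lift:.0%}")  # prints 21%
```

Note that a 21% relative lift here is an absolute change of less than one percentage point, so it helps to report both numbers.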

Benefits of A/B Testing

A/B testing offers several advantages that affect the success of campaigns and strategies. The most important is the ability to make decisions based on measured results rather than assumptions.

Optimizing Conversion Rates

- A/B testing lets marketers experiment with individual elements of a campaign, such as headlines, images, or call-to-action buttons, to determine which version yields the highest conversion rate.

- By running the variations at the same time on randomly assigned audiences, marketers can attribute differences in results to the change itself rather than to timing, and quickly identify what resonates best with their target audience.

- Optimizing conversion rates through A/B testing can lead to increased sales, higher ROI, and better overall performance of marketing campaigns.

Data-Driven Decision-Making

- With A/B testing, marketers can base their decisions on actual data and results rather than on guesswork or intuition.

- By analyzing the outcomes of A/B tests, marketers can gain valuable insights into customer preferences, behavior patterns, and trends, enabling them to fine-tune their strategies for better performance.

- Data-driven decision-making facilitated by A/B testing can help marketers allocate resources more effectively, identify areas for improvement, and ultimately drive better business outcomes.

Implementing A/B Testing

When it comes to implementing A/B testing, it’s important to follow a systematic approach to ensure accurate results and meaningful insights for your marketing campaigns.

Setting Up an A/B Test

Setting up an A/B test involves the following steps:

- Determine your goal: Clearly define what you want to achieve with the A/B test, whether it’s to increase click-through rates, improve conversion rates, or optimize user engagement.

- Identify variables: Choose the elements of your campaign that you want to test, such as headlines, call-to-action buttons, images, or layout.

- Create variations: Develop one or more alternative versions of the selected element to test against the control (the current version).

- Split your audience: Assign your audience at random to segments of comparable size to ensure a fair test.

- Run the test: Launch the A/B test, monitor the performance of each variation, and let the test run until it has collected the amount of data you planned for.

- Analyze results: Evaluate the data collected during the test to determine which variation performed better based on your goal.

- Implement changes: Apply the insights gained from the A/B test to optimize your marketing campaign.
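The "split your audience" step can be sketched as a deterministic, hash-based assignment, so that the same visitor always sees the same version. This is one common approach under stated assumptions, not the only way to randomize; the function name, the user IDs, and the experiment name `homepage-cta` are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to a variant; the same inputs always give the same variant."""
    # Including the experiment name keeps assignments independent across tests.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Check that a hypothetical audience of 10,000 users splits roughly in half.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-cta")] += 1
print(counts)
```

Hashing rather than drawing a random number on each visit avoids storing assignments and keeps the experience consistent for returning visitors.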

Selecting the Right Variables to Test

Choosing the right variables to test is crucial for the success of your A/B test. Here are some best practices:

- Focus on high-impact elements: Prioritize testing variables that are likely to have a significant impact on your campaign’s performance.

- Limit the number of variables: Test one element at a time to accurately attribute any changes in results to that specific variable.

- Consider your goal: Select variables that directly align with your testing objectives to gather relevant insights.

- Ensure statistical significance: Collect enough data to draw statistically valid conclusions about the performance of each variation.
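How much data is "enough" can be estimated before the test starts. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function name, the default 5% significance level and 80% power, and the example rates are assumptions for illustration, not values from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, target_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant for a two-sided test."""
    p1, p2 = baseline_rate, target_rate
    if p1 == p2:
        raise ValueError("rates must differ to compute a sample size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical goal: detect a rise in conversion rate from 10% to 12%.
print(sample_size_per_variant(0.10, 0.12))
```

Smaller expected differences require much larger samples, which is one reason to prioritize high-impact elements.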

Best Practices for Running Successful A/B Tests

To run successful A/B tests, consider the following best practices:

- Set clear objectives: Define specific goals for your A/B test to guide your testing strategy and interpretation of results.

- Follow a structured process: Establish a standardized process for conducting A/B tests to maintain consistency and reliability.

- Monitor performance metrics: Track key performance indicators throughout the test to identify trends and make informed decisions.

- Iterate and optimize: Continuously refine your testing approach based on learnings from previous tests to improve future campaigns.

Analyzing A/B Testing Results

When it comes to analyzing A/B testing results, it’s crucial to look beyond just the numbers. Understanding the data and drawing meaningful insights is key to making informed decisions for your marketing strategies.

Interpreting A/B Test Results

- Compare Key Metrics: Look at important metrics such as conversion rates, click-through rates, bounce rates, and other relevant KPIs for both variations A and B.

- Statistical Significance: Ensure that the results are statistically significant to draw reliable conclusions. Use tools like p-values and confidence intervals to determine significance.

- Segment Analysis: Break down the results by different segments such as demographics, location, or device type to identify any patterns or trends.

- User Behavior: Dive deep into user behavior data to understand how users interacted with each variation and why one performed better than the other.
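The significance check described above can be sketched with a two-proportion z-test, which yields both a p-value and a confidence interval for the difference in conversion rates. This is a minimal illustration using only the standard library; the function name and the visitor and conversion counts are hypothetical, and other tests may suit small samples better.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                        confidence: float = 0.95):
    """Return (z, two-sided p-value, confidence interval for rate_b - rate_a)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled standard error under the null hypothesis of equal rates.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Unpooled standard error for the interval around the observed difference.
    se_diff = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    margin = NormalDist().inv_cdf(0.5 + confidence / 2) * se_diff
    diff = p_b - p_a
    return z, p_value, (diff - margin, diff + margin)


# Hypothetical results: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
z, p_value, ci = two_proportion_test(200, 5000, 250, 5000)
print(f"z={z:.2f}, p={p_value:.4f}, CI=({ci[0]:.4f}, {ci[1]:.4f})")
```

A p-value below the chosen threshold (0.05 is a common convention) together with an interval that excludes zero suggests the difference is unlikely to be due to chance alone.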

Common Pitfalls in Analyzing A/B Testing Data

- Ignoring Statistical Significance: Drawing conclusions without statistical significance can lead to inaccurate decisions.

- Overlooking Segmentation: Failing to analyze results by segments can mask important insights that can improve targeting and personalization.

- Biased Interpretation: Being influenced by preconceived notions or expectations can skew the interpretation of results. Stay objective.

- Not Considering External Factors: External factors like seasonality or market trends can impact results, so it’s important to take them into account.
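To avoid overlooking segmentation, results can be broken down by segment and variant before drawing conclusions. The sketch below assumes each visit is recorded as a `(segment, variant, converted)` tuple; that record layout, the function name, and the sample rows are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """Return {(segment, variant): conversion rate} from per-visit records."""
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variant, converted in rows:
        totals[(segment, variant)][0] += int(converted)
        totals[(segment, variant)][1] += 1
    return {key: conv / visits for key, (conv, visits) in totals.items()}


# Hypothetical visit log: device type, variant shown, whether the visit converted.
rows = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "A", False),
    ("desktop", "B", False), ("desktop", "B", False),
]
for key, rate in sorted(conversion_by_segment(rows).items()):
    print(key, f"{rate:.0%}")
```

Each segment contains fewer visitors than the full test, so differences within a segment need their own significance check before they are acted on.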

Making Informed Decisions Based on A/B Testing Outcomes

- Iterative Testing: Use A/B testing as a continuous process to refine and optimize your marketing strategies over time.

- Implement Learnings: Apply the insights gained from A/B tests to other areas of your marketing campaigns for better overall performance.

- Document Results: Keep a record of all A/B testing outcomes and learnings to inform future decisions and avoid repeating past mistakes.

- Collaborate: Involve cross-functional teams in the analysis process to gain diverse perspectives and ensure comprehensive decision-making.

Case Studies

Case studies provide valuable insights into the impact of A/B testing on marketing campaigns. By analyzing real-life examples, we can uncover key takeaways and best practices for implementing successful A/B tests.

E-commerce Website Redesign

One case study involves an e-commerce website that ran A/B tests on different versions of its homepage. By testing variations in layout, color schemes, and call-to-action buttons, the company was able to identify the most effective design for increasing conversion rates. The A/B test revealed that a simple, clean layout with a prominent “Shop Now” button resulted in a significant boost in sales compared to the original design.

Email Marketing Campaign Optimization

Another case study focused on optimizing email marketing campaigns through A/B testing. By testing variations in subject lines, content, and send times, a company was able to improve open rates and click-through rates. The A/B test showed that personalized subject lines and concise content led to higher engagement among subscribers, ultimately driving more conversions.